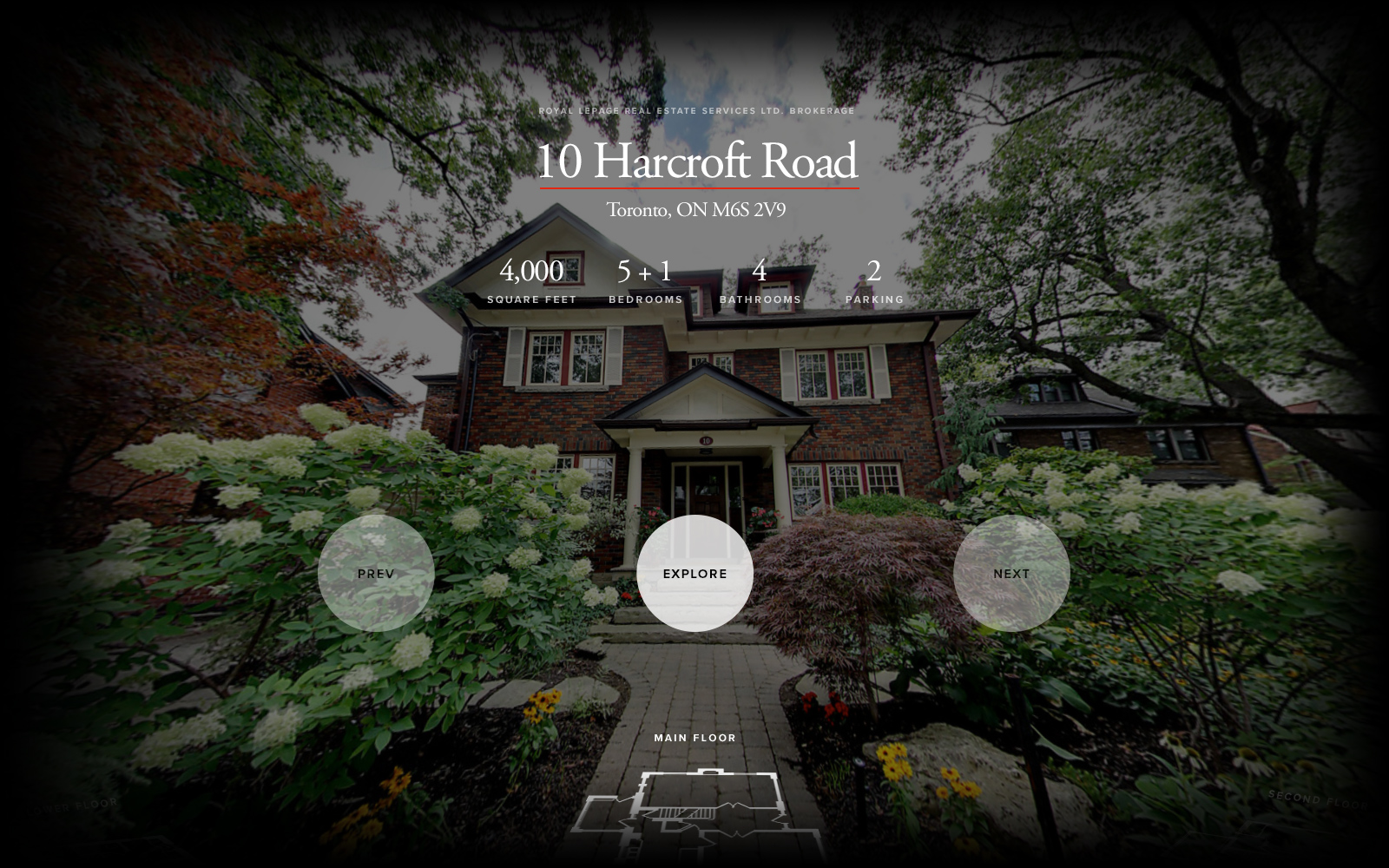

In an industry that has always been home to fragmented and inconsistent experiences, Realvision strives to make real estate simple, beautiful and effective. As a technology company, they partner with professional photographers and agents to improve their services by building the tools and the platform for an engaging, holistic digital experience. A single photo shoot gives photographers not only high-quality still images but also a 3D tour and a dimensioned floor plan.

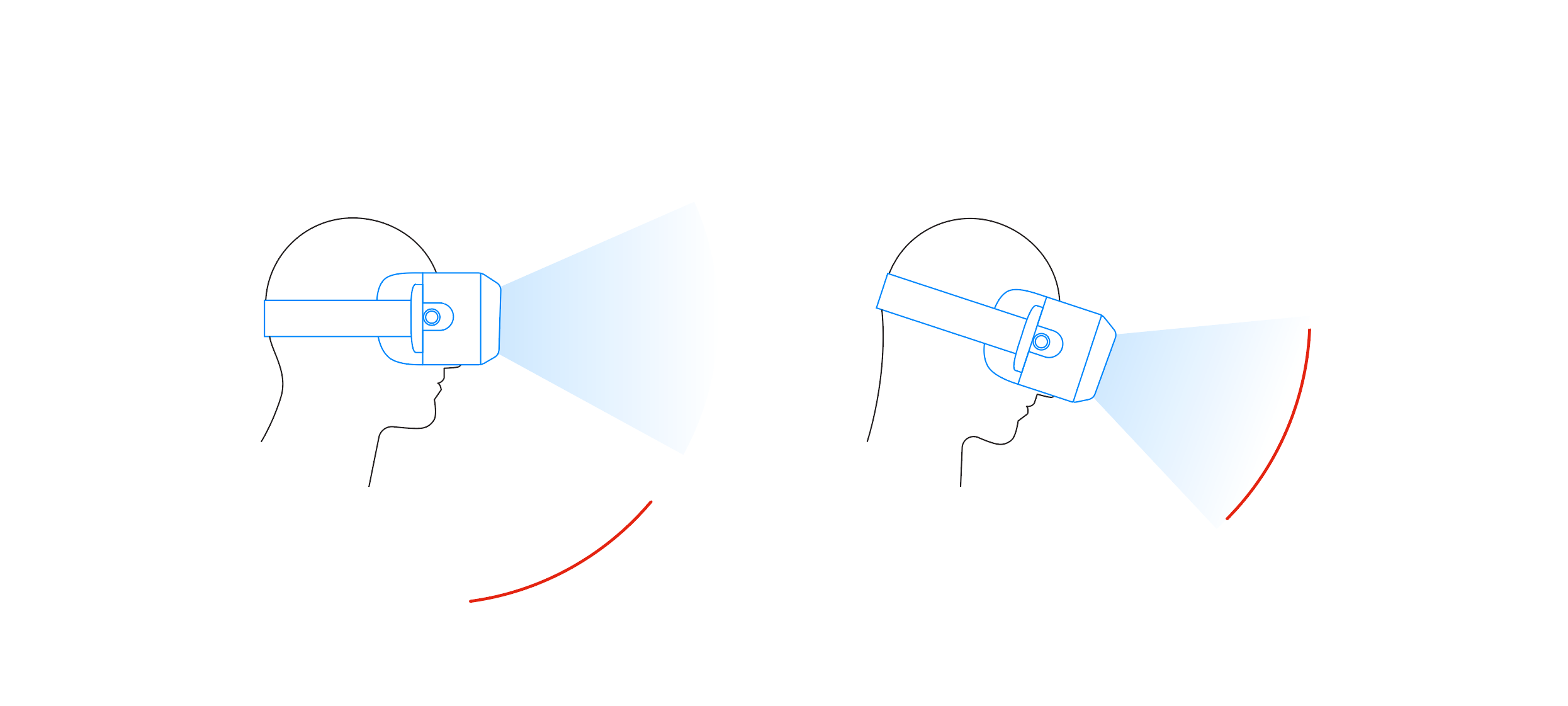

But a walk-through tour in a web browser can only be so engaging. What if we could go one step further and fully immerse our users in the property? By bringing the full experience into a VR environment, we’re able to pull users directly into properties, allowing them to walk through a home without ever taking a step.

VR has been a rapidly evolving field for user research and the study of user behavior, so it was an exciting exercise to explore such a wild frontier from an interaction design perspective.